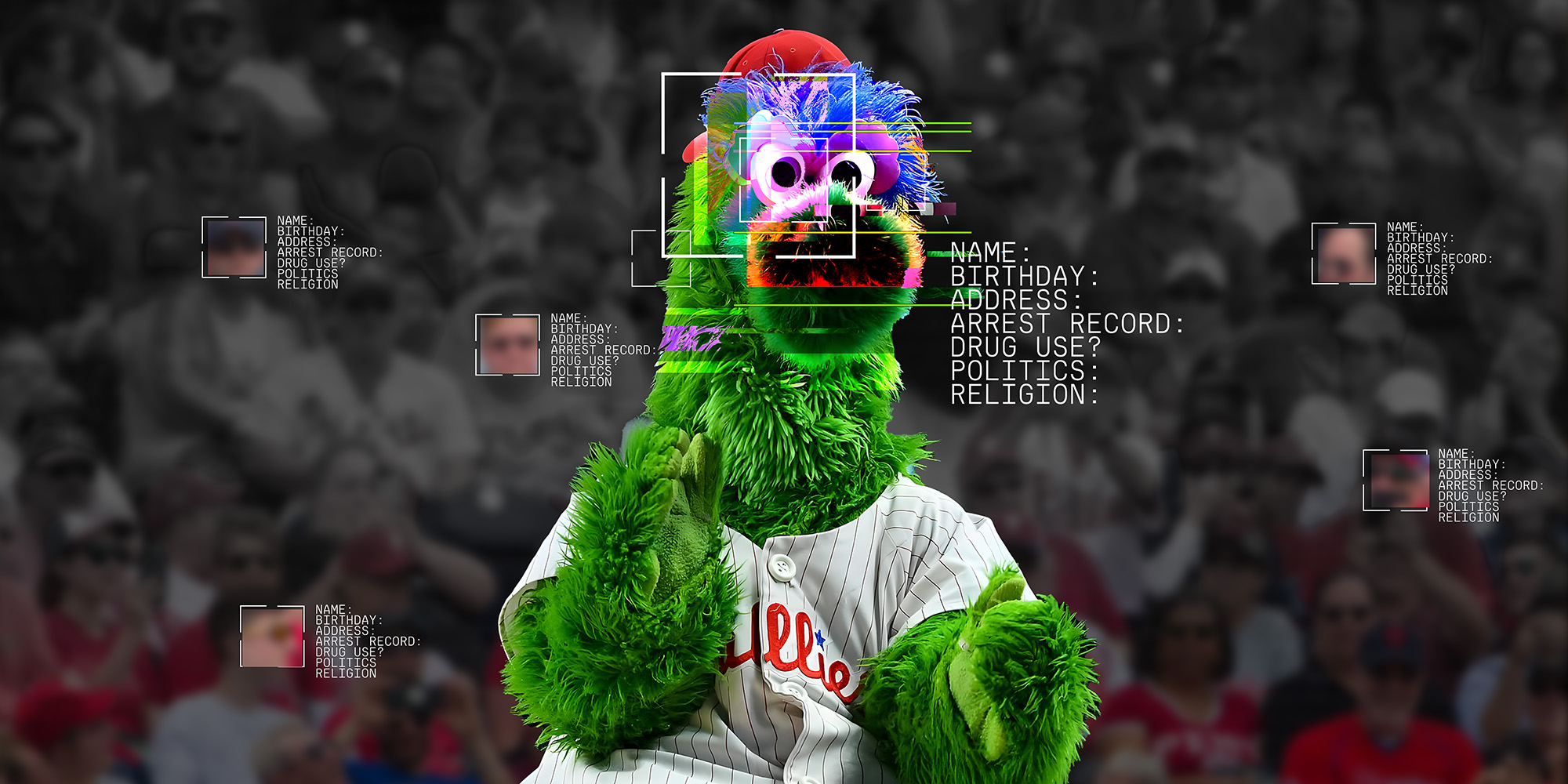

No Facial Recognition at Stadiums!

We’re calling foul: facial recognition threatens our safety and privacy and has no place in sports stadiums. We are rights defenders and privacy experts and we demand that major sports leagues, including the MLB, as well as team owners and stadium management companies immediately reject the implementation of face surveillance technology and any other biometric surveillance tools.

Sign the open letter to oppose the spread of facial recognition at stadiums!

To Major League Baseball, team owners, venue owners, sports leagues, and all leaders in professional sports:

On behalf of leading consumer, privacy, and civil liberties organizations, we are calling on you to protect the privacy and safety of fans, players, and workers by putting an end to the use of facial recognition and other biometric technology at sporting events and in your venues.

We were deeply disappointed by the recent launch of the MLB’s “Go Ahead Entry” facial recognition ticketing program at the Phillies stadium in Philadelphia. Facial recognition and other biometric tech have been creeping into the professional sports industry in a number of ways, including event entry, concession payments, and identifying players as they enter the locker room. While vendors advertise biometric tech as “convenient,” we must be clear that there is nothing convenient about the risks inherent in the collection of people’s most sensitive, personal, and unchangeable data.

Most facial recognition technology stores people’s biometric data in massive databases, and no matter what tech companies say about the safety of that data, they cannot ensure its security. Hackers and identity thieves are constantly finding ways to breach even the most secure data storage systems. When it comes to face surveillance data, the consequences of a breach can’t be overstated: unlike a credit card number, you can’t replace your face if it’s stolen.

The fact that facial recognition frequently misidentifies people of color makes its use even more dangerous. Studies have found that Asian and African American people were up to 100 times more likely to be misidentified than White men by facial recognition technology. Multiple people have already been falsely accused of, and arrested for, acts and crimes they didn’t commit as a result of facial recognition. All of them have been Black. This issue is compounded by the MLB’s privacy policy, which states that the league can hand over people’s data to law enforcement whenever it sees fit. This is the type of policy that we have seen disproportionately impact people who are frequently subjected to increased policing. As this tech spreads to more stadiums, we’ll only see more cases of traumatic misidentification impacting people of color, women, and gender nonconforming people.

The MLB and other leagues might claim that people are able to opt out of using this tech, but the promise of shorter and faster lines creates an incentive to use the facial recognition system. When people do not understand the potential risks, and are penalized with a longer wait if they don’t consent to using the tech, is that truly a fair “opt in” process? This system also unfairly disadvantages people who want to, or need to, protect their biometric data, for example because they’re undocumented and/or because they are members of a community whose data has been weaponized against them by law enforcement.

The long-term consequences of the spread of this tech are also critical to consider. The proliferation of seemingly innocuous use cases, like paying for a sandwich, creates the impression that facial recognition is “safe and normal.” That in turn makes people more likely to accept ever-more invasive uses, and gets us closer to a future in which facial recognition is everywhere, identifying us everywhere we go. Once it’s impossible to remain anonymous in public, research warns us we can say “goodbye” to fundamental freedoms like freedom of speech and “hello” to authoritarianism and widespread political repression.

To make matters worse, facial recognition ticketing is trying to solve a problem that doesn’t even exist: people can easily manage tickets on their phones or use paper tickets. It’s time for sports leagues to face the reality: not only does facial recognition pose unprecedented threats to people’s privacy and safety, it’s also completely unnecessary.

In response to the demands of privacy advocates and directly impacted communities, people across sectors, including the live entertainment industry, have been mobilizing to fight the spread of this dystopian tech. Earlier this year, over 100 venues and artists signed a pledge opposing all forms of facial recognition at event venues, concert halls, and stadiums. In 2022, over 300 artists shut down palm scanning ticketing at Red Rocks, citing the same concerns about the mass collection of biometric data. Cities, counties, and states have also passed strict regulations on the use of facial recognition by law enforcement, citing concerns about racial discrimination and invasion of privacy.

Introducing discriminatory and broken technology takes us backwards in broad efforts to make stadiums safer spaces for everyone. A technology that is inherently unjust, has the potential to exponentially expand and automate discrimination and human rights violations, and contributes to an ever-growing and inescapable surveillance state should not become a feature of sporting events. We demand that all professional sports leagues take immediate action to protect fans, workers, and players by keeping stadiums free of facial recognition and all biometric technologies.

Signers

Access Now

American Friends Service Committee

Amnesty International

Demand Progress

Electronic Privacy Information Center (EPIC)

Fight for the Future

MediaJustice

Muslim Advocates

PDX Privacy

The Surveillance Technology Oversight Project (STOP)

X-Lab